# standard imports
import matplotlib.pyplot as plt
import numpy as np
import random
import os

# pytorch imports
import torch
from torch import nn
from torch.utils.data import DataLoader
from torchvision import datasets
from torchvision.transforms import ToTensor
from torchsummary import summary

CS 307: Week 13

# Download training data from open datasets.
training_data = datasets.KMNIST(
    root="data",
    train=True,
    download=True,
    transform=ToTensor(),
)

# Download test data from open datasets.
test_data = datasets.KMNIST(
    root="data",
    train=False,
    download=True,
    transform=ToTensor(),
)

# Define batch size

batch_size = 64
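As a quick sanity check on the batch size, the sketch below works out how many batches one epoch contains. It assumes the 60,000 training and 10,000 test images reported in the training logs, and the `DataLoader` default of keeping the final partial batch (`drop_last=False`). The variable names are illustrative only.

```python
import math

# Dataset sizes as reported in the training logs of this notebook.
n_train = 60_000
n_test = 10_000
batch_size = 64

# With drop_last=False (the DataLoader default) the final, smaller batch is
# kept, so the number of batches is a ceiling division.
train_batches = math.ceil(n_train / batch_size)
test_batches = math.ceil(n_test / batch_size)

# Size of the last (partial) training batch.
last_train_batch = n_train - (train_batches - 1) * batch_size

print(train_batches)     # 938
print(test_batches)      # 157
print(last_train_batch)  # 32

# The training loop logs every 100th batch; the last logged batch index is 900,
# which corresponds to (900 + 1) * 64 samples seen.
print((900 + 1) * batch_size)  # 57664
```

The final value matches the last progress line of each epoch in the logs (`57664/60000`).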

# Create data loaders.

train_dataloader = DataLoader(training_data, batch_size=batch_size)

test_dataloader = DataLoader(test_data, batch_size=batch_size)

for X, y in test_dataloader:
    print(f"Shape of X [N, C, H, W]: {X.shape}")
    print(f"Shape of y: {y.shape} {y.dtype}")
    break

Shape of X [N, C, H, W]: torch.Size([64, 1, 28, 28])
Shape of y: torch.Size([64]) torch.int64

# Get a batch of training data

batch = next(iter(train_dataloader))

images, labels = batch

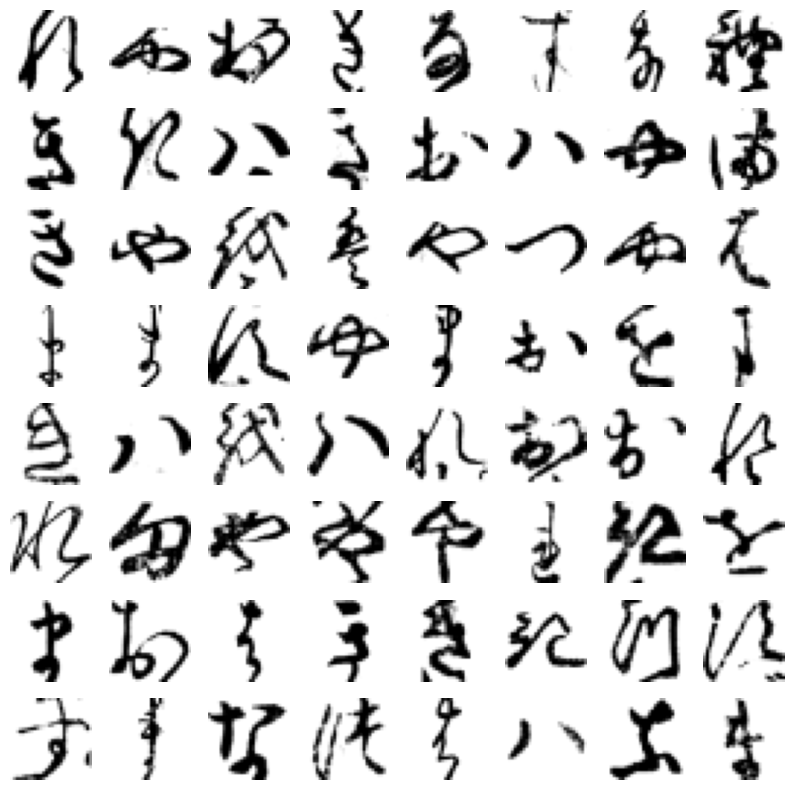

# Plot the first batch of images

fig, axs = plt.subplots(8, 8, figsize=(10, 10))
for i in range(8):
    for j in range(8):
        axs[i, j].set_axis_off()
        axs[i, j].imshow(images[i * 8 + j].squeeze(), cmap=plt.cm.gray_r)
plt.show()

# Get cpu, gpu or mps device for training

device = (
    "cuda" if torch.cuda.is_available() else "mps" if torch.backends.mps.is_available() else "cpu"
)
print(f"Using {device} device")

Using mps device

# Define model

class NeuralNetwork(nn.Module):
    def __init__(self):
        super().__init__()
        self.flatten = nn.Flatten()
        self.linear_relu_stack = nn.Sequential(
            nn.Linear(28 * 28, 512), nn.ReLU(), nn.Linear(512, 512), nn.ReLU(), nn.Linear(512, 10)
        )

    def forward(self, x):
        x = self.flatten(x)
        logits = self.linear_relu_stack(x)
        return logits

model_nn = NeuralNetwork().to(device)
print(model_nn)

NeuralNetwork(
  (flatten): Flatten(start_dim=1, end_dim=-1)
  (linear_relu_stack): Sequential(
    (0): Linear(in_features=784, out_features=512, bias=True)
    (1): ReLU()
    (2): Linear(in_features=512, out_features=512, bias=True)
    (3): ReLU()
    (4): Linear(in_features=512, out_features=10, bias=True)
  )
)

# Define model

class ConvNet(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv_stack = nn.Sequential(
            nn.Conv2d(1, 32, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=2, stride=2),
            nn.Conv2d(32, 64, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.MaxPool2d(kernel_size=2, stride=2),
            nn.Flatten(),
            nn.Linear(64 * 7 * 7, 10),
        )

    def forward(self, x):
        logits = self.conv_stack(x)
        return logits

model_cnn = ConvNet().to(device)
print(model_cnn)

ConvNet(
  (conv_stack): Sequential(
    (0): Conv2d(1, 32, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (1): ReLU()
    (2): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
    (3): Conv2d(32, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (4): ReLU()
    (5): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
    (6): Flatten(start_dim=1, end_dim=-1)
    (7): Linear(in_features=3136, out_features=10, bias=True)
  )
)

# Define loss function

loss_fn = nn.CrossEntropyLoss()
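Both models return raw logits with no softmax layer, which is what `nn.CrossEntropyLoss` expects: it applies a log-softmax internally and then averages the negative log-likelihood of the true class. The sketch below checks this on a small made-up batch; the tensors `logits` and `targets` are hypothetical stand-ins, not data from this notebook.

```python
import math

import torch
import torch.nn.functional as F
from torch import nn

torch.manual_seed(0)

# Hypothetical stand-ins for one small batch: 4 samples, 10 classes.
logits = torch.randn(4, 10)           # raw, unnormalized model outputs
targets = torch.tensor([3, 0, 9, 1])  # integer class indices (int64)

# Built-in loss, applied directly to the logits.
loss_builtin = nn.CrossEntropyLoss()(logits, targets)

# Same quantity by hand: log-softmax over the class dimension, then the
# mean negative log-probability assigned to the correct class.
log_probs = F.log_softmax(logits, dim=1)
loss_manual = -log_probs[torch.arange(4), targets].mean()

print(torch.allclose(loss_builtin, loss_manual))  # True

# A model that assigns equal probability to all 10 classes has loss ln(10).
print(round(math.log(10), 4))  # 2.3026
```

The value ln(10) ≈ 2.30 is a useful reference point: the first logged losses of both training runs (about 2.31) sit close to it, as expected for untrained networks that predict nearly uniform class probabilities.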

# Define optimizers

optimizer_nn = torch.optim.SGD(model_nn.parameters(), lr=1e-3)

optimizer_cnn = torch.optim.SGD(model_cnn.parameters(), lr=1e-3)

def train(dataloader, model, loss_fn, optimizer):
    size = len(dataloader.dataset)
    model.train()
    for batch, (X, y) in enumerate(dataloader):
        X, y = X.to(device), y.to(device)

        # Compute prediction error
        pred = model(X)
        loss = loss_fn(pred, y)

        # Backpropagation
        loss.backward()
        optimizer.step()
        optimizer.zero_grad()

        if batch % 100 == 0:
            loss, current = loss.item(), (batch + 1) * len(X)
            print(f"loss: {loss:>7f} [{current:>5d}/{size:>5d}]")

def test(dataloader, model, loss_fn):
    size = len(dataloader.dataset)
    num_batches = len(dataloader)
    model.eval()
    test_loss, correct = 0, 0
    with torch.no_grad():
        for X, y in dataloader:
            X, y = X.to(device), y.to(device)
            pred = model(X)
            test_loss += loss_fn(pred, y).item()
            correct += (pred.argmax(1) == y).type(torch.float).sum().item()
    test_loss /= num_batches
    correct /= size
    print(f"Test Error: \n Accuracy: {(100*correct):>0.1f}%, Avg loss: {test_loss:>8f} \n")

epochs = 5

for t in range(epochs):
    print(f"Epoch {t+1}\n-------------------------------")
    train(train_dataloader, model_nn, loss_fn, optimizer_nn)
    test(test_dataloader, model_nn, loss_fn)
print("Done!")

Epoch 1

-------------------------------

loss: 2.313945 [ 64/60000]

loss: 2.301249 [ 6464/60000]

loss: 2.301835 [12864/60000]

loss: 2.281255 [19264/60000]

loss: 2.285309 [25664/60000]

loss: 2.281746 [32064/60000]

loss: 2.291071 [38464/60000]

loss: 2.267421 [44864/60000]

loss: 2.271816 [51264/60000]

loss: 2.269926 [57664/60000]

Test Error:

Accuracy: 21.0%, Avg loss: 2.274607

Epoch 2

-------------------------------

loss: 2.271723 [ 64/60000]

loss: 2.258649 [ 6464/60000]

loss: 2.252648 [12864/60000]

loss: 2.236372 [19264/60000]

loss: 2.228143 [25664/60000]

loss: 2.233182 [32064/60000]

loss: 2.249004 [38464/60000]

loss: 2.193489 [44864/60000]

loss: 2.205827 [51264/60000]

loss: 2.207435 [57664/60000]

Test Error:

Accuracy: 30.6%, Avg loss: 2.231061

Epoch 3

-------------------------------

loss: 2.212894 [ 64/60000]

loss: 2.194100 [ 6464/60000]

loss: 2.178476 [12864/60000]

loss: 2.163684 [19264/60000]

loss: 2.134563 [25664/60000]

loss: 2.153082 [32064/60000]

loss: 2.181096 [38464/60000]

loss: 2.071425 [44864/60000]

loss: 2.096304 [51264/60000]

loss: 2.103439 [57664/60000]

Test Error:

Accuracy: 34.9%, Avg loss: 2.157738

Epoch 4

-------------------------------

loss: 2.113696 [ 64/60000]

loss: 2.081592 [ 6464/60000]

loss: 2.051588 [12864/60000]

loss: 2.044624 [19264/60000]

loss: 1.974878 [25664/60000]

loss: 2.017395 [32064/60000]

loss: 2.064107 [38464/60000]

loss: 1.886411 [44864/60000]

loss: 1.926542 [51264/60000]

loss: 1.937511 [57664/60000]

Test Error:

Accuracy: 39.7%, Avg loss: 2.048972

Epoch 5

-------------------------------

loss: 1.960853 [ 64/60000]

loss: 1.916072 [ 6464/60000]

loss: 1.874484 [12864/60000]

loss: 1.890733 [19264/60000]

loss: 1.752843 [25664/60000]

loss: 1.829327 [32064/60000]

loss: 1.904842 [38464/60000]

loss: 1.677428 [44864/60000]

loss: 1.726264 [51264/60000]

loss: 1.740170 [57664/60000]

Test Error:

Accuracy: 44.7%, Avg loss: 1.933193

Done!

epochs = 5

for t in range(epochs):
    print(f"Epoch {t+1}\n-------------------------------")
    train(train_dataloader, model_cnn, loss_fn, optimizer_cnn)
    test(test_dataloader, model_cnn, loss_fn)
print("Done!")

Epoch 1

-------------------------------

loss: 2.314611 [ 64/60000]

loss: 2.294735 [ 6464/60000]

loss: 2.291432 [12864/60000]

loss: 2.271538 [19264/60000]

loss: 2.240789 [25664/60000]

loss: 2.235340 [32064/60000]

loss: 2.230359 [38464/60000]

loss: 2.154369 [44864/60000]

loss: 2.134482 [51264/60000]

loss: 2.080340 [57664/60000]

Test Error:

Accuracy: 41.1%, Avg loss: 2.140463

Epoch 2

-------------------------------

loss: 2.100650 [ 64/60000]

loss: 2.012050 [ 6464/60000]

loss: 1.919635 [12864/60000]

loss: 1.871289 [19264/60000]

loss: 1.604763 [25664/60000]

loss: 1.600619 [32064/60000]

loss: 1.627111 [38464/60000]

loss: 1.303081 [44864/60000]

loss: 1.269283 [51264/60000]

loss: 1.271949 [57664/60000]

Test Error:

Accuracy: 51.6%, Avg loss: 1.629555

Epoch 3

-------------------------------

loss: 1.391366 [ 64/60000]

loss: 1.183622 [ 6464/60000]

loss: 1.148979 [12864/60000]

loss: 1.142677 [19264/60000]

loss: 0.816237 [25664/60000]

loss: 0.961087 [32064/60000]

loss: 1.082015 [38464/60000]

loss: 0.902743 [44864/60000]

loss: 0.813347 [51264/60000]

loss: 0.971998 [57664/60000]

Test Error:

Accuracy: 57.8%, Avg loss: 1.365150

Epoch 4

-------------------------------

loss: 1.062371 [ 64/60000]

loss: 0.904881 [ 6464/60000]

loss: 0.901479 [12864/60000]

loss: 0.869897 [19264/60000]

loss: 0.607301 [25664/60000]

loss: 0.752270 [32064/60000]

loss: 0.876258 [38464/60000]

loss: 0.805358 [44864/60000]

loss: 0.647347 [51264/60000]

loss: 0.876209 [57664/60000]

Test Error:

Accuracy: 62.3%, Avg loss: 1.243965

Epoch 5

-------------------------------

loss: 0.929853 [ 64/60000]

loss: 0.815947 [ 6464/60000]

loss: 0.798895 [12864/60000]

loss: 0.783030 [19264/60000]

loss: 0.517595 [25664/60000]

loss: 0.662404 [32064/60000]

loss: 0.793572 [38464/60000]

loss: 0.765405 [44864/60000]

loss: 0.570984 [51264/60000]

loss: 0.824758 [57664/60000]

Test Error:

Accuracy: 64.3%, Avg loss: 1.169368

Done!

# save the neural network model

torch.save(model_nn.state_dict(), "model_nn.pth")

print(f"Size of model_nn.pth: {os.path.getsize('model_nn.pth')} bytes")

# save the convolutional neural network model

torch.save(model_cnn.state_dict(), "model_cnn.pth")

print(f"Size of model_cnn.pth: {os.path.getsize('model_cnn.pth')} bytes")

Size of model_nn.pth: 2681682 bytes
Size of model_cnn.pth: 203548 bytes
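The gap between the two file sizes can be traced to parameter counts. The sketch below tallies the weights and biases of each layer by hand and also shows where the `64 * 7 * 7` input size of the final linear layer comes from. It assumes parameters are stored as 32-bit floats (PyTorch's default dtype); the helper functions are illustrative and not part of the notebook.

```python
def linear_params(n_in, n_out):
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out


def conv2d_params(c_in, c_out, k):
    """Weights plus biases of a k x k convolution."""
    return c_in * c_out * k * k + c_out


def out_size(size, kernel, stride=1, padding=0):
    """Spatial output size of a convolution or pooling layer."""
    return (size + 2 * padding - kernel) // stride + 1


# Trace the spatial size of a 28x28 input through ConvNet.
s = 28
s = out_size(s, kernel=3, padding=1)  # conv 3x3, padding 1 -> 28
s = out_size(s, kernel=2, stride=2)   # max pool 2x2        -> 14
s = out_size(s, kernel=3, padding=1)  # conv 3x3, padding 1 -> 14
s = out_size(s, kernel=2, stride=2)   # max pool 2x2        -> 7
flat = 64 * s * s
print(flat)  # 3136

nn_params = (
    linear_params(28 * 28, 512) + linear_params(512, 512) + linear_params(512, 10)
)
cnn_params = (
    conv2d_params(1, 32, 3) + conv2d_params(32, 64, 3) + linear_params(flat, 10)
)
print(nn_params)   # 669706
print(cnn_params)  # 50186

# Assumption: 4 bytes per parameter (float32).
print(nn_params * 4)   # 2678824
print(cnn_params * 4)  # 200744
```

Under that assumption the raw parameter data accounts for 2,678,824 of the 2,681,682 bytes in `model_nn.pth` and 200,744 of the 203,548 bytes in `model_cnn.pth`; the remaining few kilobytes are presumably serialization overhead. The convolutional model therefore reaches higher test accuracy in these runs with roughly 13 times fewer parameters.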